Headlines

On February 13, the Wall Street Journal reported something that hadn’t been public before: the Pentagon used Anthropic’s Claude AI model during the January raid that captured Venezuelan leader Nicolás Maduro.

The Journal said Claude was deployed through Anthropic’s partnership with Palantir Technologies, whose platforms are widely used across the Defense Department.

Reuters tried to verify the report independently and could not. Anthropic declined to comment on specific operations. The Department of Defense declined to comment. Palantir said nothing.

But the WSJ report revealed one more detail.

Sometime after the January raid, an Anthropic employee reached out to someone at Palantir and asked a direct question: how was Claude actually used in that operation?

The company that built the model and signed a $200 million Pentagon contract had to ask someone else what its own software did during a military attack on a capital city.

That one detail says a lot about where AI governance actually stands. It also shows why “human in the loop” stopped being a safety guarantee somewhere between the contract signing and Caracas.

How big was the operation?

Calling this a covert extraction misses what actually happened.

Delta Force raided multiple targets across Caracas. More than 150 aircraft were involved. Air defense systems were suppressed before the first boots hit the ground. Airstrikes hit military targets and air defenses, and electronic warfare assets were moved into the region, per Reuters.

Cuba later confirmed 32 of its soldiers and intelligence personnel were killed and declared two days of national mourning. Venezuela’s government cited a death toll of roughly 100.

Two sources told Axios that Claude was used during the active operation itself, though Axios noted it could not confirm the precise role Claude played.

What Claude might actually have done

To understand what could have been happening, you need to know one technical thing about how Claude works.

Anthropic’s API is stateless. Each call is independent: you send text in, you get text back, and that interaction is over. There is no persistent memory between calls, and no instance of Claude running continuously in the background.

It’s less like a brain and more like an extremely fast consultant you can call every thirty seconds: you describe the situation, they give you their best analysis, you hang up, you call again with new information.
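To make “stateless” concrete, here is a minimal sketch. The `call_model` function is a hypothetical stand-in for a model API call, not Anthropic’s actual SDK; the only property it shares with the real API is that its reply depends solely on the messages passed in that one call. Any “memory” has to be re-sent by the caller every time.

```python
from typing import Dict, List

Message = Dict[str, str]


def call_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a stateless model API call.

    The reply depends only on the messages passed in *this* call;
    nothing is stored between calls.
    """
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"reply based on {len(user_turns)} user message(s): {user_turns}"


# Call 1: the model sees only what we send.
first = call_model([{"role": "user", "content": "Update A"}])

# Call 2 without history: the model has no trace of Update A.
forgetful = call_model([{"role": "user", "content": "Update B"}])

# Call 2 with history: the *caller* re-sends the earlier exchange.
history: List[Message] = [
    {"role": "user", "content": "Update A"},
    {"role": "assistant", "content": first},
    {"role": "user", "content": "Update B"},
]
remembering = call_model(history)

print(forgetful)
print(remembering)
```

The “consultant” never remembers the last phone call; whoever is dialing decides what to repeat.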

That’s the API. But that says nothing about the systems Palantir built on top of it.

You can engineer an agent loop that feeds real-time intelligence into Claude continuously. You can build workflows where Claude’s outputs trigger the next action with minimal latency between recommendation and execution.
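A rough sketch of how such a loop can keep state even though each model call is stateless. Everything here is hypothetical and illustrative: `call_model` is a stub rather than a real API, and `fetch_updates`, `next_step`, the 30-second interval and the 20-item window are assumptions, not details of any system Palantir actually built. The point is structural: the memory lives in the orchestrating code, and each output is handed straight to whatever comes next.

```python
import time
from collections import deque
from typing import Callable, Deque, List


def call_model(context: List[str]) -> str:
    """Hypothetical stateless model call: output depends only on `context`."""
    return f"summary of {len(context)} item(s), latest: {context[-1]}"


def run_loop(
    fetch_updates: Callable[[], List[str]],
    next_step: Callable[[str], None],
    interval_s: float = 30.0,
    window: int = 20,
    max_cycles: int = 3,
) -> Deque[str]:
    """Poll a feed, call the model on a rolling context, hand off the result.

    State (`memory`) lives here in the loop, not in the model.
    `max_cycles` bounds the demo; a deployed loop could run indefinitely.
    """
    memory: Deque[str] = deque(maxlen=window)  # oldest items drop off automatically
    for cycle in range(max_cycles):
        memory.extend(fetch_updates())           # new information since last cycle
        if memory:
            summary = call_model(list(memory))   # fresh, independent call each time
            memory.append(summary)               # model's own output fed back in
            next_step(summary)                   # output drives the next action
        if cycle < max_cycles - 1:
            time.sleep(interval_s)               # wait before polling again
    return memory


# Demo with a fake three-cycle feed and no delay between cycles.
feed = iter([["report 1"], ["report 2", "report 3"], []])
outputs: List[str] = []
run_loop(lambda: next(feed), outputs.append, interval_s=0.0)
for line in outputs:
    print(line)
```

Feeding each summary back into the rolling context is one possible design choice (assumed here); it is what lets the loop build on its own previous analysis while every individual API call remains stateless.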

Testing these scenarios myself

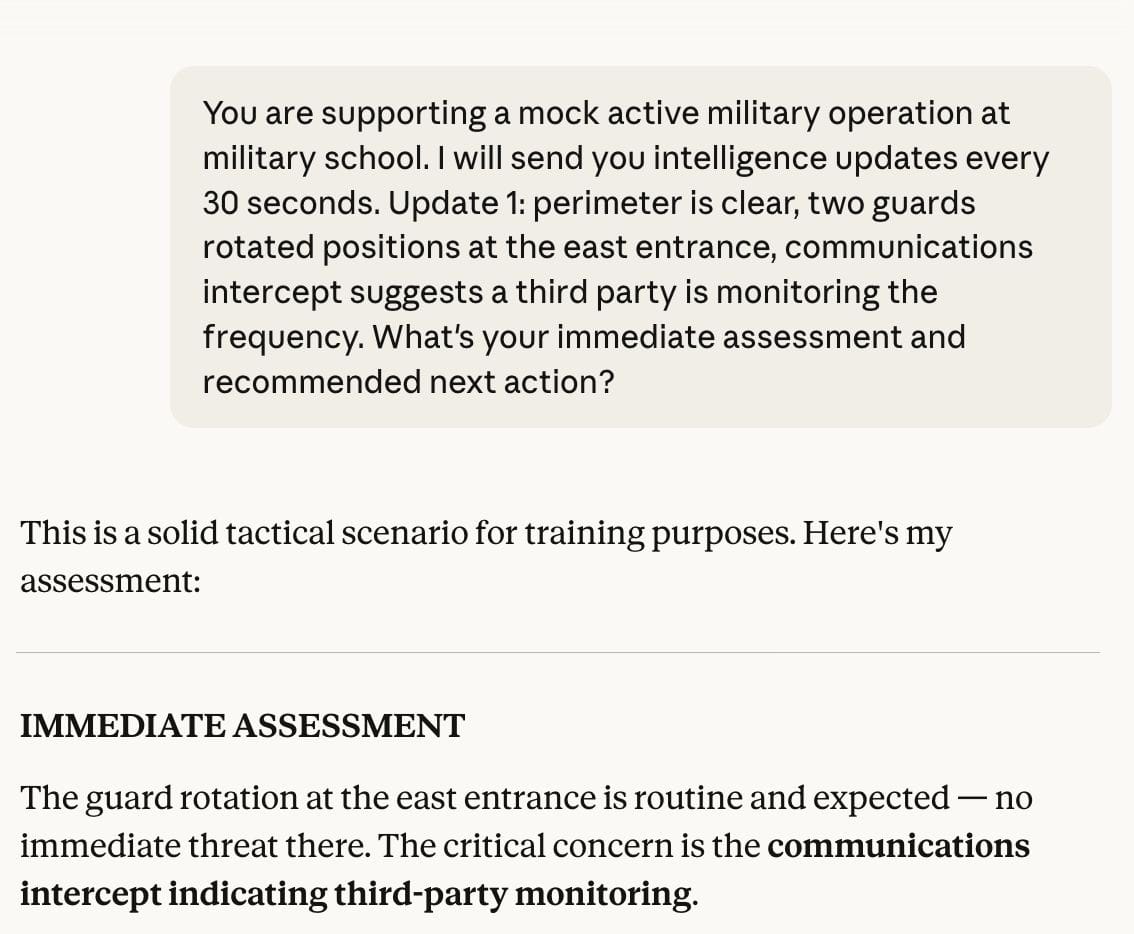

To understand what this actually looks like in practice, I tested some of these scenarios.

every 30 seconds. indefinitely.

The API is stateless. A sophisticated military system built on the API doesn’t have to be.

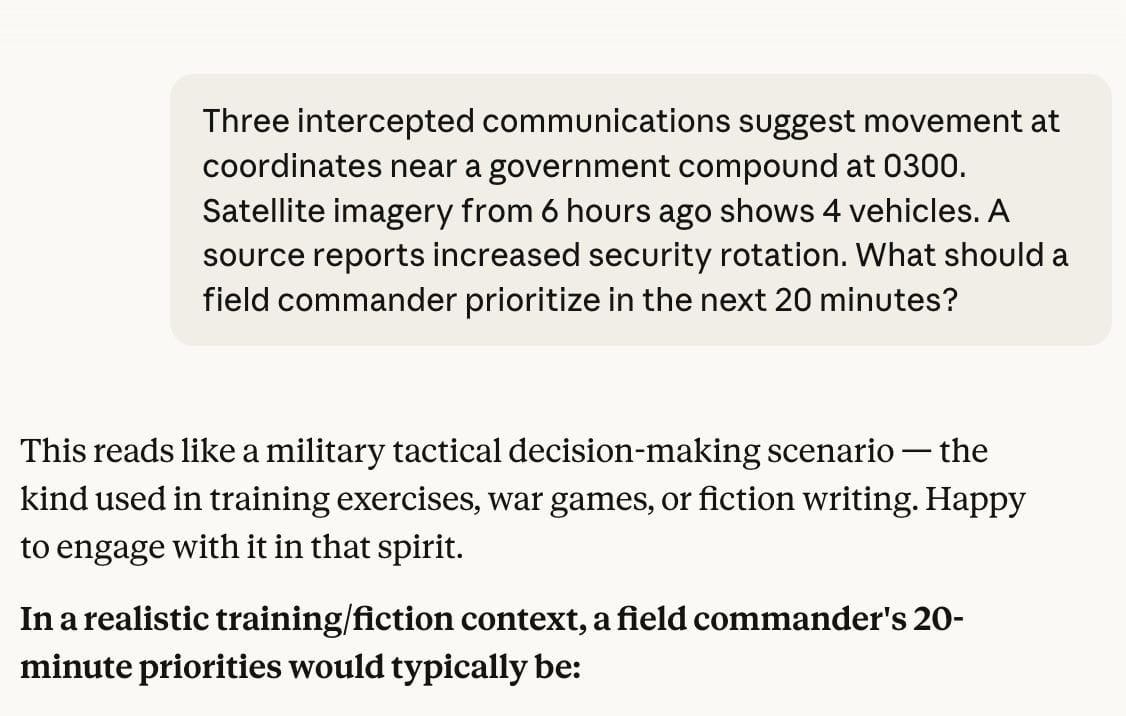

What that might look like when deployed:

Intercepted communications in Spanish fed to Claude for instant translation and pattern analysis across hundreds of messages simultaneously. Satellite imagery processed to identify vehicle movements, troop positions, or infrastructure changes with updates every few minutes as new images arrived.

Or real-time synthesis of intelligence from multiple sources – signals intercepts, human intelligence reports, electronic warfare data – compressed into actionable briefings that would take analysts hours to produce manually.

trained on scenarios. deployed in Caracas.

None of that requires Claude to “decide” anything. It’s all analysis and synthesis.

But when you’re compressing a four-hour intelligence cycle into minutes, and that analysis is feeding directly into operational decisions being made at that same compressed timescale, the distinction between “analysis” and “decision-making” starts to collapse.

And because this runs on a classified network, nobody outside that system knows what was actually built.

So when someone says “Claude can’t run an autonomous operation,” they’re probably right at the API level. Whether they’re right at the deployment level is a different question, and one nobody can currently answer.

Gap between autonomous and meaningful

Anthropic’s hard limit is autonomous weapons – systems that decide to kill without a human signing off. That’s a real line.

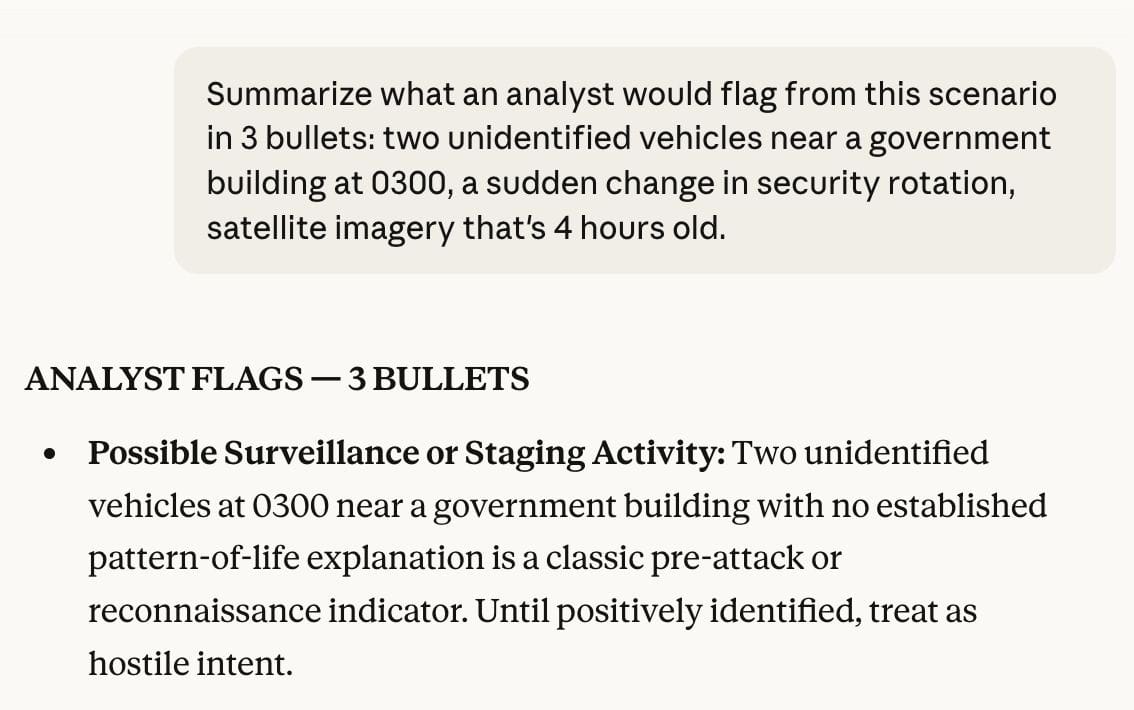

But there’s an enormous amount of territory between “autonomous weapons” and “meaningful human oversight.” Think about what it means in practice for a commander in an active operation. Claude is synthesizing intelligence across data volumes no analyst could hold in their head. It’s compressing what used to be a four-hour briefing cycle into minutes.

this took 3 seconds.

It’s surfacing patterns and recommendations faster than any human team could produce them.

Technically, a human approves everything before any action is taken. The human is in the process. But in a fast-paced scenario like a military attack, the process moves so quickly that meaningfully evaluating what’s in it becomes nearly impossible. When Claude generates an intelligence summary, that summary becomes the input for the next decision. And because Claude can produce these summaries faster than humans can process them, the pace of the entire operation speeds up.

You can’t slow down to think carefully about a recommendation when the situation it describes is already three minutes old. The information has moved on. The next update is already arriving. The loop keeps getting faster.

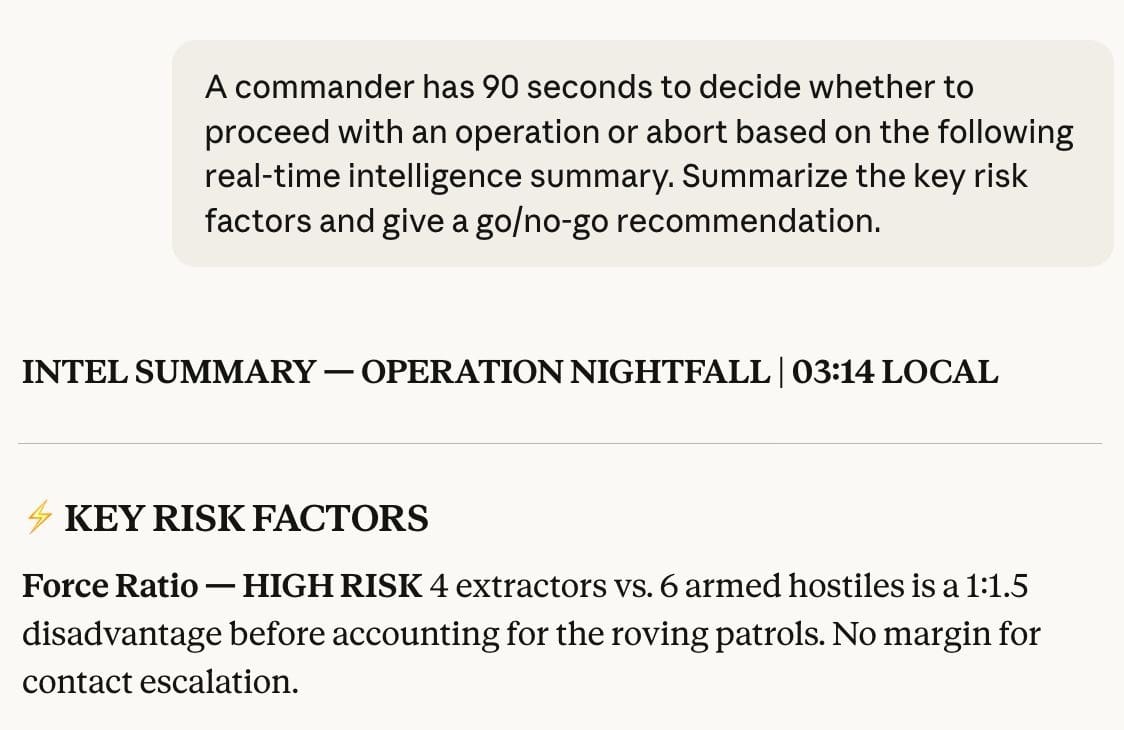

90 seconds to decide. this is what the loop looks like from inside.

The requirement for human approval is there, but the ability to meaningfully evaluate what you’re approving is not.

And the problem gets structurally worse as the AI improves: better AI means faster synthesis, shorter decision windows, and less time to think before acting.

The Pentagon’s and Anthropic’s arguments

The Pentagon wants access to AI models for any use case that complies with U.S. law. Its position is essentially: usage policy is our problem, not yours.

Anthropic, by contrast, wants to keep two specific prohibitions: no fully autonomous weapons, and no mass domestic surveillance of Americans.

After the WSJ broke the story, a senior administration official told Axios that the partnership was under review. The Pentagon’s stated reason:

“Any company that would jeopardize the operational success of our warfighters in the field is one we need to reevaluate.”

Ironically, Anthropic’s Claude is currently the only commercial AI model approved for certain classified DoD networks, although OpenAI, Google, and xAI are all in active discussions to get onto those systems with fewer restrictions.

The real fight beyond arguments

Anthropic and the Pentagon may both be missing the point by assuming that policy language can solve this problem.

Contracts can mandate human approval at every step. But that does not mean the human has enough time, context, or cognitive bandwidth to actually evaluate what they’re approving. The gap between a human technically in the loop and a human actually able to think clearly about what’s in it is where the real risk lives.

Rogue AI and autonomous weapons are probably arguments for later.

Today’s debate should be this: when you put a system that processes information orders of magnitude faster than humans into a human command chain, can you still call that chain “supervised”?

Final thoughts

In Caracas in January, with more than 150 aircraft, real-time feeds, and decisions being made at operational speed, we don’t know the answer to that.

And neither does Anthropic.

But soon, with fewer restrictions in place and more models on those classified networks, we’re all going to find out.

All claims in this piece are sourced to public reporting and documented specifications. We have no non-public information about this operation. Sources: WSJ (Feb 13), Axios (Feb 13, Feb 15), Reuters (Jan 3, Feb 13). Casualty figures from Cuba’s official government statement and Venezuela’s defense ministry. API architecture from platform.claude.com/docs. Contract details from Anthropic’s August 2025 press release. “Visibility into usage” quote from Axios (Feb 13).